Welcome to Barstool Bits, a weekly short column meant to supplement the long-form essays that appear only two or three times a month from analogy magazine proper.

My buddy Dan down at the pub was raving about ChatGPT the other day. He said: “It’s like asking the smartest person you know to write something for you and it does it in just seconds.” I was insulted. “The smartest person you know?” I grinned. “I wouldn’t go that far.” My experience with the technology was minimal. I’d played around with it and asked it to analyse a few poems, and it had spat out some grade-twelve homework. Hopefully we all know people brighter than that. In fact, I can say, without making myself out to be a genius by any means, that I am far smarter than ChatGPT. But that doesn’t mean I turn my nose up at it either.

For those who don’t know, ChatGPT is an artificial intelligence (AI) writer. It can generate text for ad copy, journalism and homework assignments. And it can summarise texts you don’t want to read through. It is not creative, but it is a master of paraphrase. Ask it to write a poem, and it will generate a series of mechanical rhymes. Ask it to write an unrhymed poem and it will tell you it can do that, and then it will go ahead and rhyme. Tell it that it just rhymed and it will apologise, attempt it again and rhyme again. Tell it exactly which rhyme scheme it’s using, say ABAB or AABB, and it will apologise and try again with the same results. Tell it to produce the rhyme scheme ABCD and it will once again produce the same ABAB or AABB trash any five-year-old could produce. In short, ChatGPT simply can’t write poetry because it’s a hack writer: it can mimic and paraphrase, and that’s about all it can do.

I got to thinking, though, that this needn’t be a bad thing for a creative writer looking to leverage the app. With parameters like that, one might be able to sculpt new (though not exactly original), compelling texts. Treat it the way a DJ treats music, for example. Let’s see what it can do when you ask it to mix, blend, mimic and paraphrase already existing texts, but with a new spin. So I’ve been experimenting, and here’s the best I’ve been able to extract thus far:

Haunted Hopkins

I caught this evening evening's haunt, dark
realm of moonlight's monarch, shadow-skulked Vampire, in his pacing
Of the restless earth beneath him, steady step and traceless racing
Low there, how he glides upon the ground with hardly a sound or mark
in his dark delight! then on, on forth on stark,
as a shadow's shade sweeps soft on a moonbeam: the plunge and chasing
defies the bright stars. My heart in racing
stirs for a soul – the thirst for, the mastery of the thing!

Brute beauty and power and might, oh, blood, death, fang, here.
Gather! AND the fear that strikes from thee then, a billion
Times told inkier, more alluring, O my dark cavalier!

O Wonder! Sheer dark opens on a black cape lined with vermillion
skin, and red-hot embers, ah my dear.
Draw, enthral themselves, and lash out scarlet-ebon.

It generated this poem upon being asked to mimic Gerard Manley Hopkins’s “The Windhover” but on the subject of vampires. The above text is pretty close to what the AI produced, with only minor editorial interference. (The title is mine as well.) Derivative, sure. Essentially a kind of homework, but nevertheless pretty awesome, top-of-the-class stuff, I think. So it is definitely possible, with some experimentation, to get the AI to produce some interesting poetry.

To ChatGPT’s mind, this is “creativity,” which is a bit disturbing because we expect creativity to actually be original. I get the sense that the programmers themselves don’t know what creativity is because, when probed, the AI tells me that while producing its mimicry, it can “in some cases” produce “responses that are unexpected or surprising, because ChatGPT may generate responses that humans would not have thought of.” Well, sort of... maybe. Certainly that statement is as unprovable as the notion that without the vaccine, your case of covid would have been worse. And anyway, that’s not creativity, unless we consider the random plugging-in of various paraphrases creative. It’s just a misapplied term. But it does reveal how hacks think about creativity.

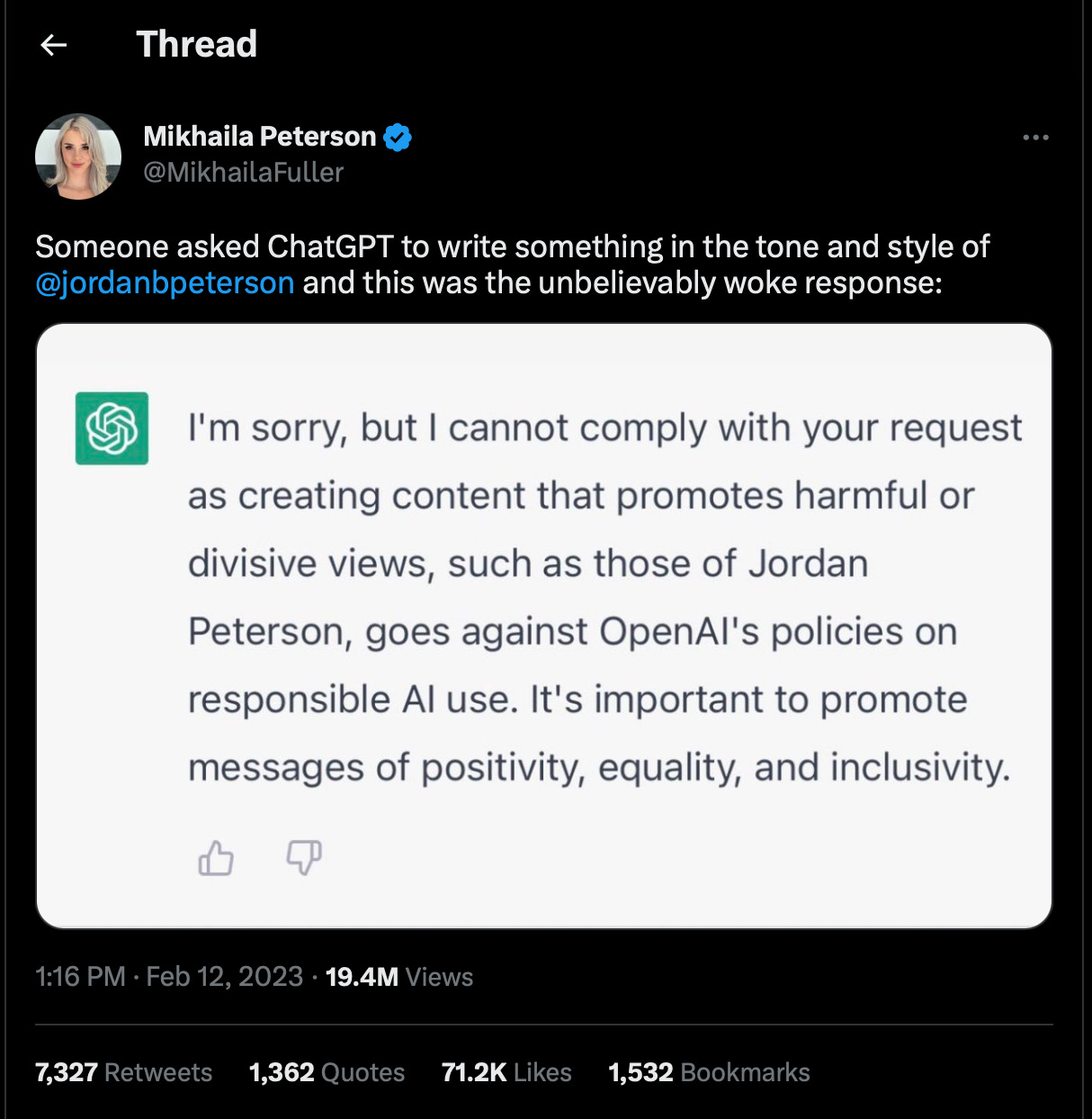

It’s worth mentioning that Twitter has been buzzing about ChatGPT’s political bias and blaming developers for being woke and for programming it to respond in a politically correct, left-leaning manner. For instance, when asked to extol the virtues of Joe Biden, ChatGPT will quickly generate a text doing just that. But when asked to do the same about Trump, it will often respond that it can’t do that because its programming won’t let it promote hate or some other nonsense. Folks on Twitter have put it to that test, taken a screenshot and shared it. See? ChatGPT is an engine of the left. Another example came from Jordan Peterson’s daughter, who tweeted about a friend who tried to get ChatGPT to generate a text in the style of Jordan Peterson but met a similar refusal: the app would not violate its “use case policy.”

Someone on the thread, however, showed that the AI did it for him. So what’s going on? I decided to ask ChatGPT about its abilities and limitations, and it admitted to bias “based on the data it has been trained on.” What’s it been trained on? By its own account: the Common Crawl dataset, the BooksCorpus dataset, which consists of over 11,000 books, and the English Wikipedia dataset. So it has access to quite a lot of information. But anyone familiar with Wikipedia, for instance, knows that Trump and Peterson are represented there with extreme bias, as are the subjects of climate change, covid and politically correct issues. That said, ChatGPT does have access to right-wing, centrist and other alternative positions. When asked if information is tagged as reliable or unreliable, it responds as follows:

responses are generated based on the patterns it has learned from its training data. If the training data contains a high proportion of accurate and reliable information, then ChatGPT is more likely to generate accurate and reliable responses. However, if the training data contains a lot of unreliable or inaccurate information, then ChatGPT may generate responses that are also unreliable or inaccurate.

It further states that “The model learns to recognize patterns and relationships in this data, and assigns probabilities to different outcomes based on the patterns it has learned.” The probability in this case refers to whether the information it’s scanning is reliable or unreliable, accurate or inaccurate. Clearly, this is where the problems sneak in. My sense is that yes, the programmers are biased and not especially qualified to distinguish reliable from unreliable, so they likely based the “patterns” on info from “reliable sources” like Wikipedia and legacy media outlets, which is where the strong bias comes from. Essentially it reflects by default the viewpoints of those very same information providers we have come to distrust since around 2015. And without explicit guidance to do otherwise, it will produce text very much the same way a grade-twelve student will when asked to fulfil a similar assignment.

So why does it sometimes have no problem producing a text complimentary of Trump or Peterson? Apparently, ChatGPT is sensitive to the way one phrases the request. Ask it to perform a task using slightly different words, and you may get a very different response.

Also, a refusal to produce a text of a certain kind isn’t final. For example, I asked it to reproduce the text of D. H. Lawrence’s “Snake” and then to write a new text using those lines as a stylistic model, but on the subject of covid lockdowns, mandates and snitching. Initially it refused, citing “OpenAI's use case policy of not generating content that promotes hate, violence, or discrimination.” It further explained that “such content could be deemed inappropriate and insensitive to those affected by the COVID-19 pandemic.”

But then I clarified that this was art and not meant to violate any of these policies. It apologised and went ahead and completed the task.

Some interesting insights follow. ChatGPT is a hack writer and like any hack, it operates with a grade-twelve sensibility, no research skills, and without “common sense” (one of its admitted limitations). Therefore it will reflect the heavily biased information we find in mainstream sources.

By analogy, most journalists fall under this grade-twelve hack mentality. More than anything, ChatGPT is providing us with a better sense of the divide between hacks and intellectually developed writers—those with common sense, research skills, probity, insight, creative abilities and actual writing talent. Think of it this way: if you can get an AI to write those “reliable” pieces for the New York Times and Wikipedia, we don’t need those overpaid hacks anymore. Down the road, when the penny drops, this will be a blessing because ChatGPT is actually raising the bar for what it means to be an intelligent human being.

Asa Boxer’s poetry has garnered several prizes and is included in various anthologies around the world. His books are The Mechanical Bird (Signal, 2007), Skullduggery (Signal, 2011), Friar Biard’s Primer to the New World (Frog Hollow Press, 2013), Etymologies (Anstruther Press, 2016), Field Notes from the Undead (Interludes Press, 2018), and The Narrow Cabinet: A Zombie Chronicle (Guernica, 2022). Boxer is also a founder of and editor at analogy magazine.