Welcome to Barstool Bits, a weekly short column meant to supplement the long-form essays that appear only once or twice a month in analogy magazine proper.

The idea of statistical significance has played a vital role in confusing our sense of reality. For some reason, the concept is felt to be scientific despite its highly abstract quality. Moreover (as I will explain shortly), when we use statistical significance as an interpretive tool, it is always an indicator of uncertainty. Owing to its usefulness as a brain-baffling device, as well as to its promiscuous applicability, those managing the 2020-2023 lockdowns deployed the notion of statistical significance to rationalise just about any decision, no matter how antidemocratic and unethical. In the area of health care and medicine, the problem with this metric has turned out to be acute and in urgent need of redress.

As I pointed out in “The Lab Leak Contagion Fraud,” the whole germ theory of illness and disease is pseudoscience. As Mike Stone has indicated in “The Germ Hypothesis Part 1,” the main issue with germ theory (and virology in particular) is that viruses were never proven to actually be a phenomenon: in other words, they’ve never been proven to exist. Worse, the hypothesis has been falsified over and over again. Moreover, and this follows logically, they’ve never been proven to cause illness, another hypothesis that has been repeatedly falsified. All of this boils down to the field’s having abandoned the scientific method from its inception to this very day.

Let’s review the basics of the scientific method. I found that Mike Stone used this approach very effectively, and I’d like readers to benefit from some of the same reminders. Let’s start with this quotation:

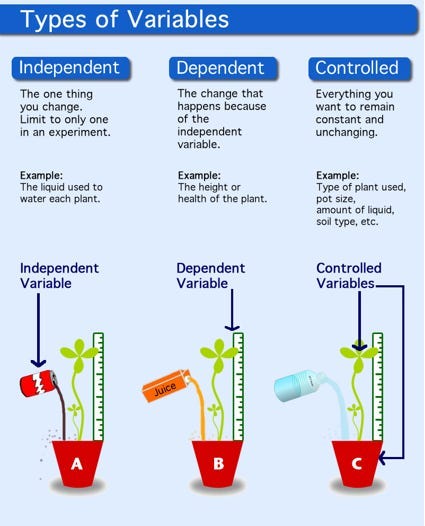

What this means is that a hypothesis must be designed and written in such a way as to “prove” whether or not a predicted relationship derived from the natural phenomenon exists between two variables: the independent variable (the presumed cause) and the dependent variable (the observed effect).

[All quotes are from the above-mentioned article from Mike Stone’s ViroLIEgy Newsletter Substack.]

Ideally, there should also be a control variable or even several controls. Here’s a handy infographic that Stone shared:

Stone proceeds to walk us through Science 101, since we’ve clearly forgotten the fundamentals:

Once the hypothesis has been established, a proper experiment can be designed in order to test it. According to American philosopher and historian of science Peter Machamer in his 2009 paper “Phenomena, data and theories: a special issue of Synthese,” the experiment should show us something important that occurs within the real world. The goal is to ensure that the aspects of the observed natural phenomenon that originally sparked the hypothesis are “caught” in the design of the experiment. In this way, the experiment will be able to tell us something about the world and the phenomena studied. Thus, it is critical that the hypothesis is tested properly through an experimental design that accurately reflects the observed natural phenomenon and what is seen in nature.

When every germ contagion experiment has proven that viral illnesses are not transmitted via proximity, breath, sputum particles, phlegm exchange, sweat, or blood. . . like. . . that should be it: you close the book on that hypothesis. Even if (and this is not the case, but it illustrates how exacting authentic science truly is) 9 out of 10 experiments confirm a hypothesis, it only takes one well-designed experiment to falsify it. In other words, even if contagion seemed to be conclusive in 9 out of 10 experiments, it would take only one well-designed experiment to falsify the hypothesis. Let’s recall that scurvy was once considered a contagion.

Now imagine you put 10 people in a room of the sort you might find in most cafes and just let them behave as they normally would in that environment, except that one of them has full-blown flu symptoms. Then you follow up with them after a few days to find out how many caught the flu. Even if 8 out of 9 got sick, the one who didn’t get sick renders the whole experiment inconclusive.

Ah, but there’s immunity, you say. Well, that’s an interesting, even compelling, tale (I mean, the immunity hypothesis). I guess we’ll have to come up with an experiment to test that notion. We are now, however, two removes from reality, since we came up with the immunity concept to back up the unproven hypothesis that a tiny bogeyman is afoot. In fact, the notion of immunity to a virus is being used here to dismiss inconvenient data. We have now successfully invented a fictional world to support an initial fiction. We are no longer in the realm of science here: this is make-believe, scientiphysized art.

To be clear, the numbers I’ve presented regarding viruses and contagion are inverted. In fact, all efforts to infect a human being or an animal with a virus by the means we claim contagion works—breathing, sneezing, coughing, touching each other, embracing, kissing, touching shared surfaces, sharing towels—have failed.

Let’s be rigorous with the application of our principles. If 1 or 2 out of 10 in a shared space did get sick, does that prove that so-called “contagion” is a thing, an actual phenomenon? In other words, with which side does the burden of proof lie? It’s important to keep in mind that it’s the hypothesised element that bears the burden of proving itself. Is there truly a wee dibuk jumping around and possessing hosts, or is that just a neat idea that turned out upon experimentation to have been false? I don’t have to prove that dibuks don’t exist; if you believe in them, and no one’s ever seen one, then you’re the one who has to prove they do.

My point is that statistically significant outcomes are not a scientific tool. They are, in fact, the reverse: the reasoning is counter-scientific; it’s a workaround to circumvent scientific rigour and to slip falsified ideas into our heads as TheScience™. Every time I examine how we misuse stats and probabilities, I find some new area of misapplication.

To give the notion its due, let’s see where the use of statistically significant outcomes might be appropriate. You give 100 people the same medicine, and 30 get better. A 30% recovery rate might be an indicator. So you say, well, let’s try a placebo trial and compare. If the outcome is similar, you may decide, Hey, 30% wasn’t especially promising to begin with, and this clinches the matter. That’s honest science. If, on the other hand, 70 patients recovered with the course of treatment while only 30 recovered in the placebo group, now we’ve got something to work with. If the so-called “side effects” are minor, it’s worth a shot! What we’re doing here is applying pragmatic workarounds (heuristics) to navigate an area of uncertainty and to rationalise the application of a course of disease treatment.
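For readers who want to see what “statistically significant” amounts to in the hypothetical trial above, here is a minimal Python sketch of one standard check, the two-proportion z-test. It assumes a placebo group of 100 (the paragraph doesn’t give a size) and relies on the usual normal approximation. The function name `two_proportion_z_test` is illustrative and does not come from any particular library.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two proportions.

    Uses the pooled normal approximation, which is only reasonable when each
    group has a fair number of both recoveries and non-recoveries.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate: the best single estimate if both groups truly share one rate
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all or none recovered in both groups; the test is undefined")
    z = (p_a - p_b) / se
    # Two-sided tail of the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical trial: 70 of 100 recover on treatment, 30 of 100 on placebo
    z, p = two_proportion_z_test(70, 100, 30, 100)
    print(f"z = {z:.2f}, two-sided p = {p:.1e}")
```

With 70 of 100 versus 30 of 100, this gives z of about 5.66 and a two-sided p-value of about 1.5 × 10⁻⁸. In other words, a gap that large would be very unlikely if treatment and placebo had the same recovery rate. Note what the number does not say: it tells you nothing about which particular patient will recover.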

Since individual biology is idiosyncratic, we expect inconclusive results when it comes to healing, especially owing to the so-called “placebo effect”. . . (which, let’s face it, ought to be the focus of medical science, as it’s clearly the key to human health). In other words, patient outcomes are unpredictable. So what do we do when something’s unpredictable? We turn to statistics and probabilities and dress up our ignorance in scientiphysizations; throw on a % or two and voilà: here’s some sciency chatter to distract you from the BS. But it ain’t science. It’s a method of working around the loss of authority that would come with having to admit flat out that you don’t know and cannot predict outcomes. Even the most narcissistic of MDs must admit, when pressed, that they really can’t promise anything (likely because insurers are finicky about lying to people). Nevertheless, our culture relates to Western medicine (pharmaceutical medicine) as though it were an exact science.

In simple language, you can’t declare that, because something works sometimes or even often, it’s going to work in any particular case. So we turn to stats and probabilities to help us make a choice in circumstances of uncertainty. That’s when they come in handy, and that’s what they’re for. What statistical significance cannot do is tell us whether a phenomenon exists or whether a given agent is causal. Indeed, in circumstances that call for certainty, one can be sure that the invocation of statistical significance indicates a falsified hypothesis.
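The point about particular cases can be made concrete with a little binomial arithmetic. This sketch assumes, purely for illustration, that each patient independently recovers at the 70% rate from the hypothetical trial. `binomial_pmf` is a helper written for this example, not a library function.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k recoveries among n patients, each recovering
    independently with probability p (an illustrative assumption)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


if __name__ == "__main__":
    rate = 0.7  # hypothetical recovery rate, taken from the 70-out-of-100 example
    n = 10      # hypothetical: the next ten patients treated
    print(f"A given patient does not recover: {1 - rate:.0%}")
    print(f"All {n} recover: {binomial_pmf(n, n, rate):.1%}")
    print(f"Exactly 7 of {n} recover: {binomial_pmf(7, n, rate):.1%}")
```

Under those assumptions, all ten patients recover only about 2.8% of the time, and exactly seven of ten recover only about 27% of the time. A stable group-level rate still leaves any particular patient’s outcome open.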

At this stage of the game, I’m not sure how our science culture might go about the serious house cleaning required. We need a renewed scepticism: a school of scientists whose job is to review all our so-called facts, every Nobel-Prize-winning idea, and to communicate their findings to the public. Let’s call the group the Special Committee for the Restoration of Science. In an ideal world, the committee would include folks with a solid understanding of analogy, rather than the usual dismissive ignorance of the subject we encounter in science literature today. The group would have two main goals: (1) to distinguish what we actually know from what we don’t know, with the purpose of overhauling our research priorities; and (2) to flag confirmation-bias practices and curb their usage.

As I see it, persons trained in literary studies (that is, folks familiar with fiction, storytelling, and poetics) could help cut through a lot of the sciency nonsense that arises from a lack of familiarity with this side of thinking and communication. Historians and philosophers could help a great deal as well on the special committee I have in mind. Unfortunately, with the corporate control of science, such a committee is the baseless fabric of a vision. For the time being, we’ll have to make do with being stuck in this dark age of sciency chimeras and the priestly fact checkers who let the dibuk loose upon us when the corporate barons signal.

Asa Boxer’s poetry has garnered several prizes and is included in various anthologies around the world. His books are The Mechanical Bird (Signal, 2007), Skullduggery (Signal, 2011), Friar Biard’s Primer to the New World (Frog Hollow Press, 2013), Etymologies (Anstruther Press, 2016), Field Notes from the Undead (Interludes Press, 2018) and The Narrow Cabinet: A Zombie Chronicle (Guernica, 2022). Boxer is also the founder and editor of analogy magazine.

I've had statistically significant thoughts about the use of "statistically significant" since the start of the 2020 'covid' psyop...

I've reminded others a statistically significant number of times that facts and statistical facts are not the same...

Once upon a time, I had a group of friends who loved to watch professional sports. They loved to bet on games, and they based their wagers on the statistical likelihood of one team beating the other. Before making each bet, they had fun spending hours analyzing the statistics amassed about each player to supplement their calculation of which team they thought was most likely to win. I used to ask them why they put so much faith in analyzing all that data. "Numbers don't lie, dude," they said. At the time, I thought it was a stupid, if funny and harmless, way of overvaluing statistics. That changed years later when covid came along and they all believed the projected numbers being thrown at us: how many people would die from this deadly new germ, how many lives would be saved if we locked down now for X number of months, how many percentage points your risk of infection would be reduced if you wore a mask. Suddenly their religious devotion to numbers didn't seem so funny and harmless anymore. What is it about statistics that brings out the true believer in people who otherwise make fun of religion? Is it as simple as a false sense of security? An easy workaround to critical thinking? Mass obedience to covid tyranny was creepy enough as it was, but it also showed the dark side of people's unquestioning devotion to numbers and their inability to see how obviously manipulated or outright falsified statistics were being used to persuade them to do things that put their health, and their lives, at risk.